Making Sense of Judging Feedback

By Greig McGill

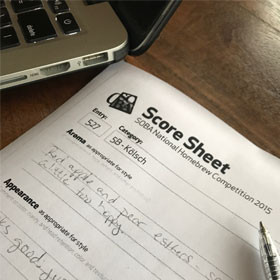

Having been on both sides of the scoresheet, so to speak, I thought it could be useful to create a practical guide to interpreting what can occasionally be cryptic data. Interpreting this data correctly can be the key to making much better beer, or simply performing better in competitions.

Firstly, let me explain that judges don’t try to be cryptic or obscure when they fill out a scoresheet. They are simply constrained by having no certainty about what’s in their glass. They cannot, therefore, make specific comments about ingredients such as “perhaps consider not using Columbus as a dry hop in such a delicate beer as it dominates and destroys the balance”. Also, as I’ve mentioned previously, judging beer is a fatiguing process. The quality of the feedback you receive may depend on where your beer appeared in a flight, or on the quality of the beers around it. This is unfortunately “just one of those things”, and while competition organisers do their best to ensure each beer gets a fair shake, there will always be beers which are less scrupulously judged than others.

Secondly, I’m mainly talking about BJCP-style judging sheets here, but the information provided is fairly general and should apply to almost any style of formal competition.

OK, with the excuses out of the way, here are some tips on how you can interpret the information you get back on your scoresheet, ordered by the way a beer is usually judged and not necessarily the order of criteria on the sheet itself.

Appearance

Appearance is simple from a descriptor point of view, but harder when trying to tie it to a score. Judges have different tolerances for haze. Unless I can read a newspaper through the sample, you will probably get a “slight haze” remark from me. Judges will sometimes even shine a torch through the beer to see if there’s much diffusion from haze! In most cases, judges shouldn’t really dock any points for very light haze unless the beer style says clarity should be brilliant, but some may. Haze might also be used as an “adjustor”. I’ll come back to that later. In general, you won’t get much comment here. It usually comes down to adherence to style, and general visual appeal. Negative terms such as “murky” or “dirty” probably imply the judge finds the beer personally displeasing even if it doesn’t necessarily fall foul in the points department. Due to the nature of serving at competitions, the comments you can probably pay the least attention to here are those relating to head retention. “Head fades quickly” can be a product of competition conditions. If you get comments such as “dead flat” or “unable to rouse a head”, it might be worth looking at your process – in particular the carbonation of your beer, the protein content of your wort, and any adjuncts which may affect either.

Aroma

Aroma is often the “busiest” field on the scoresheet. Most of what we perceive as flavour is actually aroma, and as such you will often see many descriptors in the aroma field and a simple “as per aroma” in the flavour field. Judges should really be commenting just on adherence to style, and appropriateness of aromatics detected, but will often get carried away and get a little descriptive. You can largely ignore that. There should really just be notes about aromatics which are out of place in the style, or are generally too low or too high in intensity. The score should reflect this. Aromatic faults such as DMS and acetaldehyde will show up here, and other faults may also be evident. However, faults that are subtle (light contamination), or that are present at low levels and mainly show up as a mouthfeel or flavour component (low VDK/diacetyl), may escape notice here.

Flavour

As noted above, flowery descriptors here are out of place, but often occur, especially in the case of very good or very bad beers. What should be here, as with aroma, are notes on appropriateness to style and anything that adds to or detracts from the score provided. Look for adverbs like “very” or “extremely” to understand the impact of any note on your score. This is also where you’ll get feedback on potential contamination or beer fault issues that may have been covered by hops, esters, or other aromatics in the aroma.

Mouthfeel

Mouthfeel is where you’ll get the majority of your feedback on carbonation, any astringency, appropriateness of body, and any tactile faults such as diacetyl slickness. As with appearance, this is often a sparsely populated field. It could be as little as “light”. That said, it’s usually the simplest to interpret, as most mouthfeel issues have simple causes and remedies. If all else is in line with the style, it’s also rare for mouthfeel alone to significantly affect a beer’s score unless the issue is particularly egregious.

Overall

This is usually the “catchall” field for any comments the judges wish to make to summarise the beer and send a message to the brewer. It may contain brewing advice, where a fault is obviously caused by poor technique. It is often used to justify the score provided, and to explain what the brewer would need to do to achieve a higher score next time.

General

Many brewers get disheartened when they receive a mediocre score and very little commentary on a sheet. This is actually good data. It means that your beer was average: generally to style, but not a great example, and without much to comment on. If that’s the case, you’re on the right track to become a great brewer and just need to work on your “X factor” to make your beers a little more exciting – freshness, hop selection, fermentation management to get the most from your yeast, etc. If a category scores at roughly 50% (never lower) with no more than an “OK” comment, then the judges simply had nothing negative to say, but nor were they wowed by the beer. If it scores lower than 50% in any category, notes should always be provided, even if very brief.
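If you want to check a sheet against that 50% rule of thumb, here is a minimal Python sketch. It assumes the section maximums on the standard BJCP beer scoresheet (aroma 12, appearance 3, flavour 20, mouthfeel 5, overall 10, for a total of 50). The function names and the example scores are made up purely for illustration, and other competition formats will weight things differently.

```python
# Maximum points per section on the standard BJCP beer scoresheet (50 in total).
# Other competition formats may use different weightings.
BJCP_MAX = {"aroma": 12, "appearance": 3, "flavour": 20, "mouthfeel": 5, "overall": 10}


def section_fractions(scores):
    """Return each section's score as a fraction of its maximum."""
    return {name: scores[name] / BJCP_MAX[name] for name in BJCP_MAX}


def sections_needing_notes(scores, threshold=0.5):
    """List sections scoring below the threshold, where notes should be expected."""
    return [name for name, frac in section_fractions(scores).items() if frac < threshold]


# Hypothetical scoresheet, invented for this example.
example = {"aroma": 6, "appearance": 2, "flavour": 9, "mouthfeel": 3, "overall": 5}

print(sum(example.values()))            # total out of 50
print(sections_needing_notes(example))  # sections below 50% of their maximum
```

In this made-up example only flavour falls below half of its maximum, so that is the field where you should expect at least a brief explanation from the judges.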

If scores seem oddly out of proportion to comments given, and you’re just inside or outside a medal boundary, there may have been an adjustment factor applied. That is to say, the beer was scored individually by component, and when the component scores were added up, the judges didn’t feel that the total accurately reflected the qualities of the beer. When this happens, they will often adjust one or more categories up or down slightly so that the final score sends what they think is the correct message to the brewer. Look for this especially when a medal is just shy of the next one up – for example, a bronze medal that would have been silver with one more point. There should be a comment explaining this, but there isn’t always.

Hopefully that provides some insight into what judges write on sheets, why they can be frugal with words, and what you can infer about the relationship between comments made and scores provided. It can be useful to take samples of your beer, along with the judges’ feedback, and share them with fellow brewers in a critical session to see if the comments line up. It’s important to remember that even if you disagree, the judges could only comment on what was in their sample glass on the day. Beer is a moving target, and it can be frustrating to pay good money to enter a competition only to receive feedback that seemingly describes another beer! Judges hate it when that happens too. Sometimes all you can do is pour yourself a beer and plot your next attempt!